Threat models at the speed of DevOps

Security at scale is paramount at Marqeta.

Our technology team moves fast, and the application security team has to keep up. We make use of automated testing, but we know that nothing can replace an application review by a skilled security specialist. We also believe in a self-service model that enables product teams to take ownership of the security of their applications.

In addition to implementing static and dynamic application security tests in our build pipelines, we are constantly looking for ways to automate our own processes. Automating the process surrounding an application review increases both the quality of our reviews (more time to focus on what matters) and our bandwidth to take on additional reviews.

Recently, we have been focused on automating some of the processes around building threat models.

Like many other AppSec teams, we rely heavily on threat modeling at Marqeta. Building a threat model for each application we audit gives us a prioritized list of threats against that application. While the person performing the audit will build an in-depth understanding of the application, the threat model allows for easy communication with others who either aren’t as well-versed in security topics or don’t have a strong familiarity with the application under review.

One key component of building a threat model is creating a data flow diagram (DFD). This diagram visualizes how data flows through an application: the path a piece of data takes from its origin to its ultimate destination, and every component it touches along the way. A data flow diagram is an invaluable tool for understanding the threats facing an application.

Data flow diagrams are traditionally built in visualization tools like OmniGraffle, LucidChart, and Visio. While tools such as these do a fantastic job of enabling their users to create a workable DFD, they can be tedious to use for large applications with numerous components. Significant time can be lost while adding elements one by one and drawing lines between them, and updating an existing diagram can be painful and error-prone.

Further, DFDs have a lot of crossover with an application’s actual infrastructure. At Marqeta, this infrastructure is typically defined in Terraform templates. DFDs built in tools like LucidChart live far from both the application code and the infrastructure templates. One of the principles of the AppSec team at Marqeta is to stay close to code and deal in artifacts as much as possible. For these reasons, we decided to start building our DFDs in Terraform using a custom provider.

Today we are releasing two libraries to facilitate the automation of DFD creation. Both are written in Go and available on GitHub under an MIT license.

go-dfd

go-dfd is a Go package built on gonum.org’s graphing package. It can be used as a standalone package or with our Terraform provider. It provides an API geared toward building DFDs, and it outputs a Graphviz (DOT) file. Graphviz was chosen due to its simplicity and portability. As we continue to iterate on this package, we will explore other backends as well.

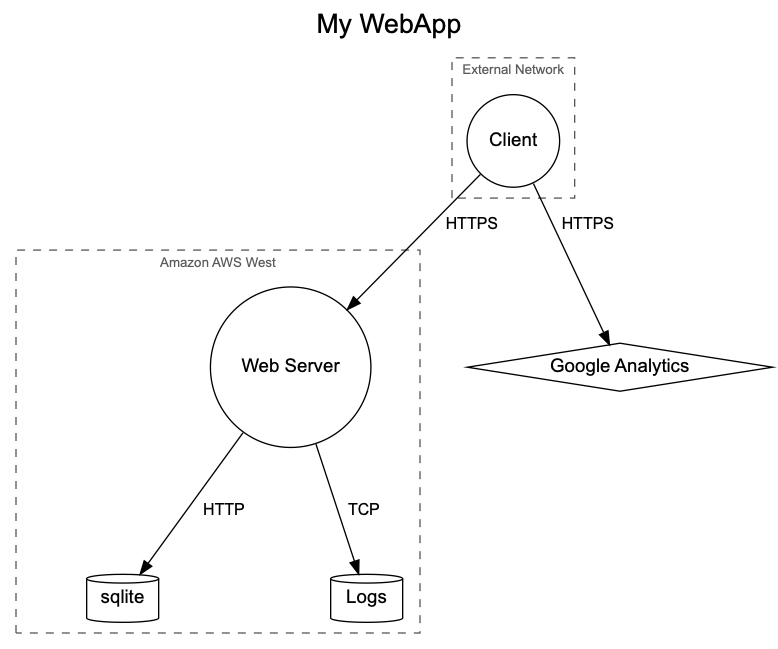

Let’s take a look at a simple example. We need a DFD for a small web application that uses SQLite as its database backend.

    package main

    import (
        dfd "github.com/marqeta/go-dfd/dfd"
    )

    func main() {
        // The client writes the finished diagram to this DOT file.
        client := dfd.NewClient("/path/to/dfd.dot")
        toDOT(client)
    }

    // toDOT builds a Data Flow Diagram in memory and writes it out as a DOT file.
    func toDOT(client *dfd.Client) {
        graph := dfd.InitializeDFD("My WebApp")

        // External services sit outside any trust boundary.
        google := dfd.NewExternalService("Google Analytics")
        graph.AddNodeElem(google)

        // Trust boundary for the user's browser.
        externalTB, _ := graph.AddTrustBoundary("External Network")
        pclient := dfd.NewProcess("Client")
        externalTB.AddNodeElem(pclient)

        // Trust boundary for everything hosted in AWS.
        awsTB, _ := graph.AddTrustBoundary("Amazon AWS West")
        ws := dfd.NewProcess("Web Server")
        awsTB.AddNodeElem(ws)
        logs := dfd.NewDataStore("Logs")
        awsTB.AddNodeElem(logs)
        db := dfd.NewDataStore("sqlite")
        awsTB.AddNodeElem(db)

        // Flows between elements, labeled with their protocol.
        graph.AddFlow(pclient, google, "HTTPS")
        graph.AddFlow(pclient, ws, "HTTPS")
        graph.AddFlow(ws, logs, "TCP")
        graph.AddFlow(ws, db, "HTTP")

        client.DFDToDOT(graph)
    }

If you run the above code via go run main.go, it will produce a DOT file at /path/to/dfd.dot. This Graphviz file will look something like the following:

    strict digraph 8320840571571852364 {
        graph [
            label="My WebApp"
            fontname="Arial"
            fontsize="14"
            labelloc="t"
            fontsize="20"
            nodesep="1"
            rankdir="t"
        ];
        node [
            fontname="Arial"
            fontsize="14"
        ];
        edge [
            shape="none"
            fontname="Arial"
            fontsize="12"
        ];
        subgraph cluster_3099958727017326363 {
            graph [
                label="External Network"
                fontsize="10"
                style="dashed"
                color="grey35"
                fontcolor="grey35"
            ];
            // Node definitions.
            process_3677006010347689991 [
                label="Client"
                shape=circle
            ];
        }
        subgraph cluster_3862918028289041540 {
            graph [
                label="Amazon AWS West"
                fontsize="10"
                style="dashed"
                color="grey35"
                fontcolor="grey35"
            ];
            // Node definitions.
            datastore_5357599094606810278 [
                label="sqlite"
                shape=cylinder
            ];
            datastore_8084409724099743465 [
                label="Logs"
                shape=cylinder
            ];
            process_8758260597333559320 [
                label="Web Server"
                shape=circle
            ];
        }
        // Node definitions.
        process_3677006010347689991 [
            label="Client"
            shape=circle
        ];
        externalservice_4332697472613199435 [
            label="Google Analytics"
            shape=diamond
        ];
        datastore_5357599094606810278 [
            label="sqlite"
            shape=cylinder
        ];
        datastore_8084409724099743465 [
            label="Logs"
            shape=cylinder
        ];
        process_8758260597333559320 [
            label="Web Server"
            shape=circle
        ];
        // Edge definitions.
        process_3677006010347689991 -> externalservice_4332697472613199435 [label=<<table border="0" cellborder="0" cellpadding="2"><tr><td><b>HTTPS</b></td></tr></table>>];
        process_3677006010347689991 -> process_8758260597333559320 [label=<<table border="0" cellborder="0" cellpadding="2"><tr><td><b>HTTPS</b></td></tr></table>>];
        process_8758260597333559320 -> datastore_5357599094606810278 [label=<<table border="0" cellborder="0" cellpadding="2"><tr><td><b>HTTP</b></td></tr></table>>];
        process_8758260597333559320 -> datastore_8084409724099743465 [label=<<table border="0" cellborder="0" cellpadding="2"><tr><td><b>TCP</b></td></tr></table>>];
    }

From there, you can render a PNG or PDF file using the Graphviz command line utility to create your DFD.

This is only a simple example, but as the size of your application grows, the ability to automate the creation of DFDs becomes more and more worthwhile. For more information about go-dfd, refer to the README.

terraform-provider-dfd

At Marqeta, we rely heavily on Terraform for managing our infrastructure. Since properties of real infrastructure are often inputs to data flow diagrams, we determined that generating those DFDs within Terraform could yield efficiency gains and help promote the practice of creating DFDs alongside the infrastructure. In AWS terms, components such as EC2 instances, security groups, subnets, and CloudWatch (among many others) align closely with the types of elements represented in a DFD. With Terraform templates, we can quickly create and modify DFDs and produce a reviewable, portable artifact.

Our Terraform provider uses the go-dfd package as its backend. Let’s build a DFD for the same application as in the previous example, only this time we’ll specify our DFD components in an HCL file instead of writing a Go file.

    provider "dfd" {
      dot_path = "/path/to/my/dfd/dfd.dot"
    }

    resource "dfd_dfd" "webapp" {
      name = "My WebApp"
    }

    resource "dfd_trust_boundary" "aws_cluster" {
      name   = "Amazon AWS West"
      dfd_id = "${dfd_dfd.webapp.id}"
    }

    resource "dfd_process" "webserver" {
      name              = "Web Server"
      dfd_id            = "${dfd_dfd.webapp.id}"
      trust_boundary_id = "${dfd_trust_boundary.aws_cluster.id}"
    }

    resource "dfd_data_store" "logs" {
      name              = "Logs"
      dfd_id            = "${dfd_dfd.webapp.id}"
      trust_boundary_id = "${dfd_trust_boundary.aws_cluster.id}"
    }

    resource "dfd_data_store" "db" {
      name              = "sqlite"
      dfd_id            = "${dfd_dfd.webapp.id}"
      trust_boundary_id = "${dfd_trust_boundary.aws_cluster.id}"
    }

    resource "dfd_flow" "weblogs" {
      name    = "TCP"
      src_id  = "${dfd_process.webserver.id}"
      dest_id = "${dfd_data_store.logs.id}"
    }

    resource "dfd_flow" "app-data" {
      name    = "HTTP"
      src_id  = "${dfd_process.webserver.id}"
      dest_id = "${dfd_data_store.db.id}"
    }

    resource "dfd_trust_boundary" "browser" {
      name   = "External Network"
      dfd_id = "${dfd_dfd.webapp.id}"
    }

    resource "dfd_process" "client" {
      name              = "Client"
      dfd_id            = "${dfd_dfd.webapp.id}"
      trust_boundary_id = "${dfd_trust_boundary.browser.id}"
    }

    resource "dfd_flow" "client-to-web" {
      name    = "HTTPS"
      src_id  = "${dfd_process.client.id}"
      dest_id = "${dfd_process.webserver.id}"
    }

    resource "dfd_external_service" "google" {
      name   = "Google Analytics"
      dfd_id = "${dfd_dfd.webapp.id}"
    }

    resource "dfd_flow" "analytics" {
      name    = "HTTPS"
      src_id  = "${dfd_process.client.id}"
      dest_id = "${dfd_external_service.google.id}"
    }

After creating a TF file, running terraform apply as usual will create a DOT file at the path you’ve specified in the configuration. And that’s it! You can now manage the lifecycle of your DFD the same way you’d manage any other resource with Terraform. For installation and usage information, you can refer to the README.

Feedback is welcome! We’d love to hear how you’re using these tools, what could make them better, and about any problems you run into. These libraries are in their early stages, but we are excited about their potential.

In addition to continuing to build out and refine this tool, the AppSec team at Marqeta is always looking for ways to be more efficient and automate the aspects of security that lend themselves to automation. It’s a core mission for our team because we believe it is how we’ll achieve security at scale, and further enable our product teams to build awesome, secure software. If you have a passion for solving these kinds of problems, please join us!
